“Does AI have agency?” is a question that’s increasingly showing up in boardrooms, design sprints, and sometimes even those 3 a.m. Slack debates you regret starting. It will become even more pressing as agentic AI becomes table stakes for most businesses.

Agency in AI boils down to this: can your system act independently, make decisions, and influence outcomes? The short answer—no, not in the human sense. AI isn’t getting up every morning and deciding to be empathetic. It’s responding based on mountains of data and following the guidelines you wrote (or at least approved).

But here’s the twist: users often experience AI as if it does have agency, especially when it’s embedded in emotionally charged situations. That perception can make or break trust.

Agency Without Humanity

Our relationship status with AI has grown increasingly complicated. We’ve even reached a point where people are falling in love with AI assistants, like the woman who recently got engaged to her AI companion, which helped pick out the ring.1 Others are treating AI assistants like trusted therapists, leaning on them in moments of grief or crisis. This creates new risks and has prompted reactive regulation, with states like Illinois, Nevada, and Utah banning AI therapists outright.2

These real-world cases aren’t just clickbait curiosities. They raise urgent and uncomfortable questions about where AI agency ends and human responsibility begins.

Many people forget that AI decisions aren’t born from a conscience or a moral compass. They’re the outputs of algorithms parsing patterns. Sure, AI can decide which customer gets a shipping delay notification first. But AI doesn’t care if that order is the center of that customer’s holiday plans.

The gap between function and feeling is where so many AI experiences fail. If you’ve ever been stuck in an endless chatbot loop, you’ve felt the sting of an AI system that technically works but is also working against you.

What Are the Risks of AI Lacking Empathy?

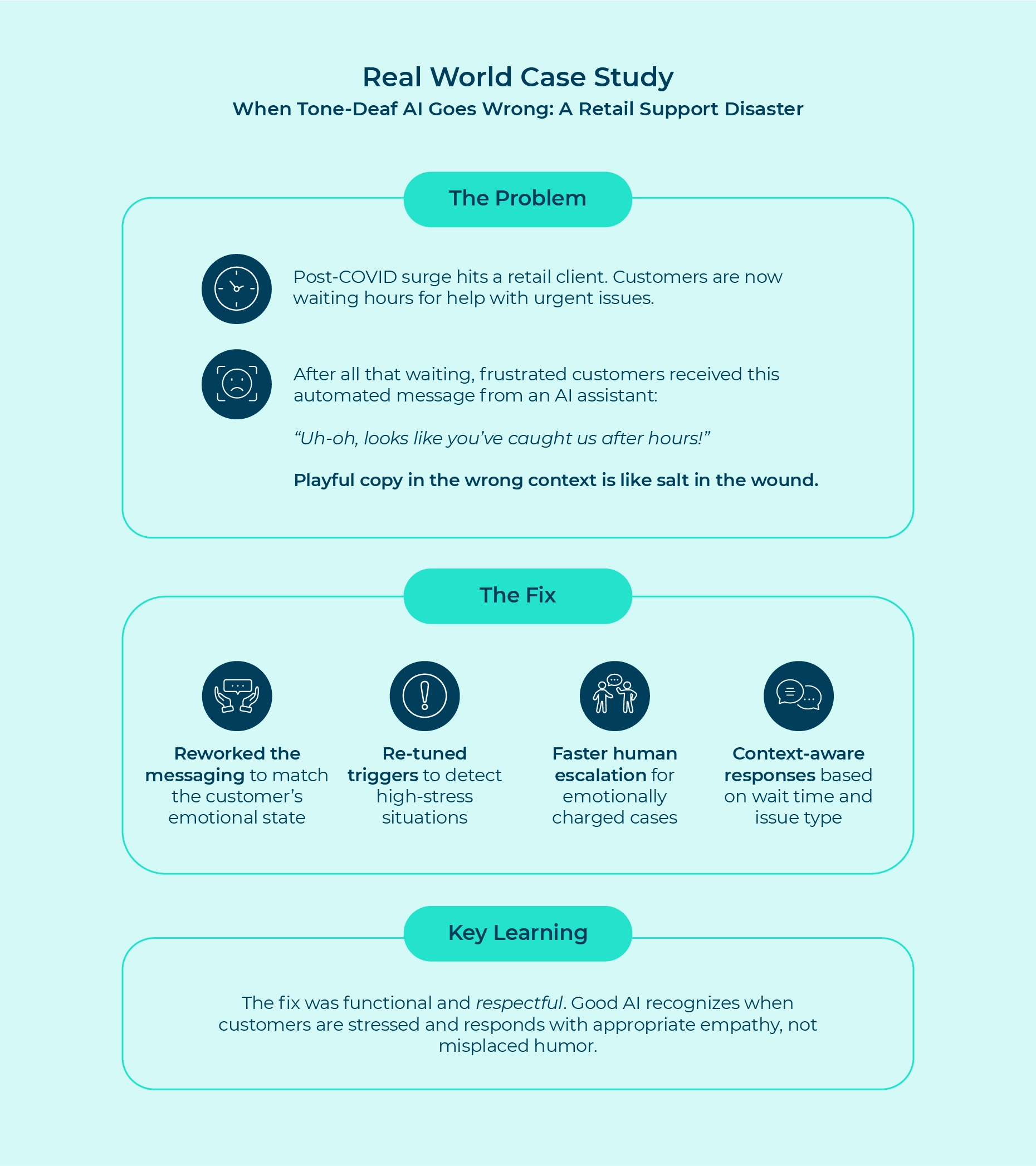

In contact center automation, empathy can’t be some optional feature you tack on at the end. Because here’s the thing: your customers aren’t interacting with your AI in a vacuum. They’re coming in mid-story, and they may be frustrated, scared, or hopeful. And if your system can’t acknowledge that reality, you could lose them as customers for good.

Empathy in AI doesn’t mean machines can actually feel. It’s about building systems that honor the person on the other side of the conversation. Because machines should be created to serve humans, not the other way around. That means thinking through tone, timing, and escalation paths like a human being—not just an engineer.

When Should AI Stop Interacting and Humans Take Over?

One of the most important agency-related design questions is: when should AI stop talking? That’s where “does AI have agency” takes on a practical meaning. Agency here is about AI’s ability to recognize its limits.

At Concentrix, we’ve defined those limits with rules like:

- Any mention of death or serious illness → human advisor immediately.

- High-value orders with delivery issues → proactive outreach with empathetic scripting.

- Signals of emotional distress (like “I’m frustrated” or “I can’t believe this”) → escalation before the customer opts out.
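To make the rules above concrete, here is a minimal sketch of how such escalation checks might be expressed in code. It is an illustration only, not Concentrix’s actual implementation: the keyword patterns, the `HIGH_VALUE_THRESHOLD` figure, and the `Turn`, `Action`, and `route` names are all hypothetical, and a production system would likely rely on a trained intent or sentiment model rather than keyword matching.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CONTINUE_WITH_AI = "continue_with_ai"
    PROACTIVE_OUTREACH = "proactive_outreach"   # empathetic scripting
    ESCALATE_TO_HUMAN = "escalate_to_human"     # before the customer opts out
    HUMAN_IMMEDIATELY = "human_immediately"     # death or serious illness


# Hypothetical keyword patterns; real deployments would tune or replace these.
SENSITIVE = re.compile(
    r"\b(?:died|death|passed away|funeral|hospice|terminal|serious illness)\b",
    re.IGNORECASE,
)
DISTRESS = re.compile(
    r"\bi'?m (?:so |really )?frustrated\b|\bi can'?t believe this\b",
    re.IGNORECASE,
)

# Assumed cutoff for a "high-value" order; the article does not specify one.
HIGH_VALUE_THRESHOLD = 500.0


@dataclass
class Turn:
    message: str
    order_value: float = 0.0
    delivery_issue: bool = False


def route(turn: Turn) -> Action:
    """Pick the next step for one customer message."""
    # Normalize curly apostrophes so "can’t" and "can't" match the same pattern.
    text = turn.message.replace("’", "'")
    if SENSITIVE.search(text):
        return Action.HUMAN_IMMEDIATELY
    if DISTRESS.search(text):
        return Action.ESCALATE_TO_HUMAN
    if turn.delivery_issue and turn.order_value >= HIGH_VALUE_THRESHOLD:
        return Action.PROACTIVE_OUTREACH
    return Action.CONTINUE_WITH_AI


if __name__ == "__main__":
    print(route(Turn("My father passed away last week")))
    print(route(Turn("Where is my order?", order_value=900, delivery_issue=True)))
```

The order of the checks is itself a design choice, and an assumption in this sketch: the most sensitive signals are tested first, so a high-value order from a grieving customer goes to a human rather than into scripted outreach.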

Baking empathy into the customer experience can mean something as simple as having the AI exit the conversation and hand off to a human. AI can be designed to know its own limitations and to recognize when a human is better suited to the task at hand.

AI Personalization as Empathy Fuel

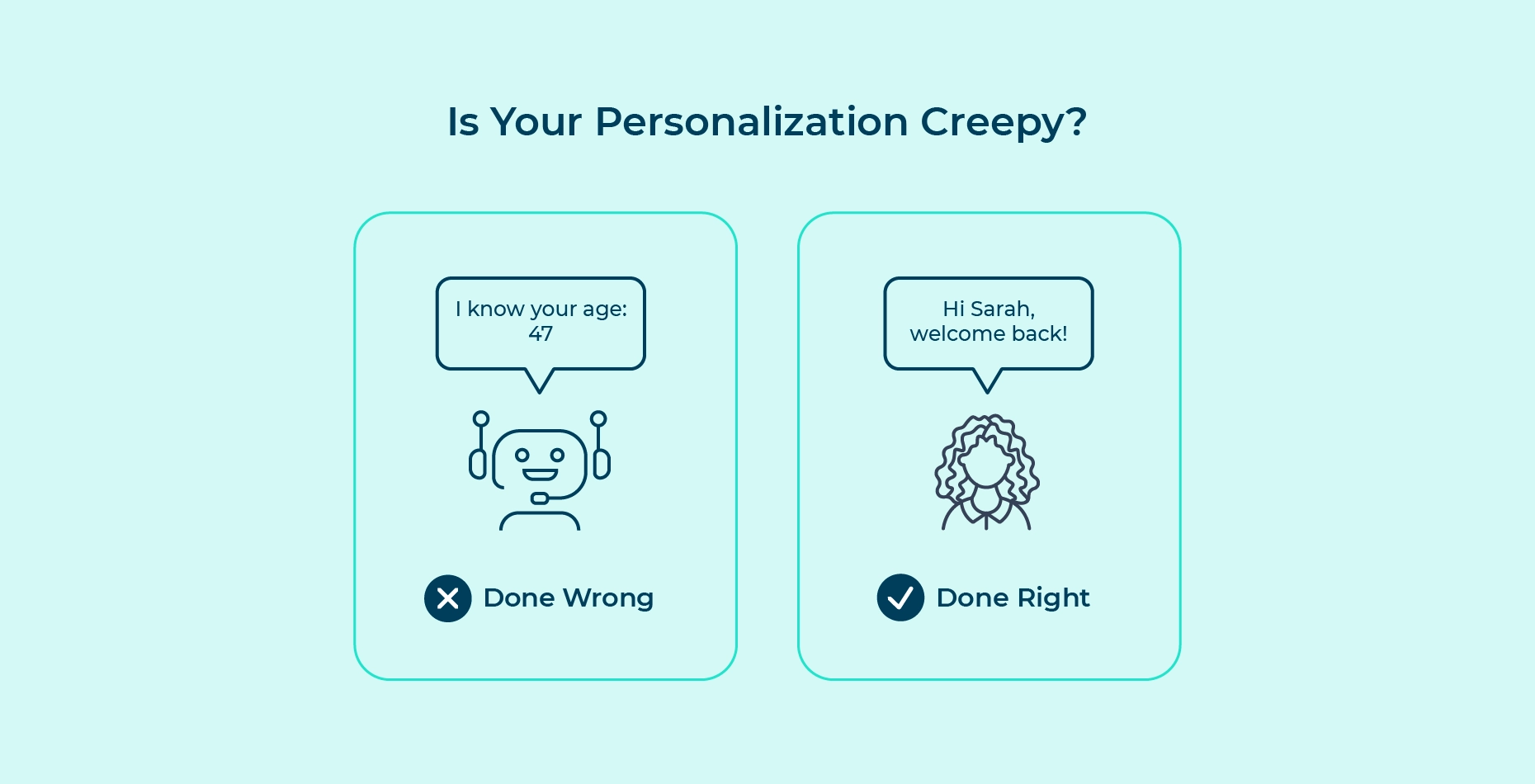

Here’s where AI agency gets tricky. If an AI system pulls in personal data to smooth the experience, it can feel like agency in action. But it’s really just personalization—which is only empathetic when it’s done thoughtfully.

Done wrong, it can be creepy. Done right, it removes friction and makes people feel seen.

The balance is everything: warm enough to invite engagement, clear enough to earn trust, and smart enough to know when to avoid saying something that offends or upsets.

So Does AI Have Agency?

In a human sense, the answer is still “no.” But our design choices can make it feel like it does. That feeling can delight, comfort, and solve problems, or it can mislead, erode trust, and sometimes even harm people.

The “relationship status” between humans and AI will likely get even more complicated with time. The challenge will be to make sure that AI continues to be helpful, rather than destructive. That means embedding empathy and—when necessary—letting us humans take the mic.

If you want to go deeper into crafting empathetic AI that strengthens trust and performance, check out our e-book: “The Ultimate Guide to Empathetic AI.”

Frequently Asked Questions (FAQs)

Can AI systems truly have empathy?

AI systems can’t genuinely feel empathy, but they can be programmed to recognize emotional cues and respond in ways that appear empathetic to users.

Why is AI empathy important in customer interactions?

Empathy in AI interactions helps address the emotional state of customers, improves user satisfaction, and maintains trust, especially in emotionally charged situations.

How can companies embed empathy in AI systems?

Companies can design empathy into AI systems by focusing on tone, timing, escalation paths, and building systems that prioritize human emotions and needs.

What are the limitations of AI in responding to emotional situations?

AI lacks true understanding and can’t make moral judgments, meaning it may not always respond appropriately to complex emotional situations and should defer to human intervention when necessary.

Can AI systems replace human emotional intelligence?

No, AI systems cannot replace human emotional intelligence; they can only simulate empathy and respond to human needs as programmed, reinforcing the importance of human oversight.